The Dawn of Artificial General Intelligence

What happens when general-purpose AI reaches a level of capability that rivals—or even surpasses—our own?

In the paper “An Approach to Technical AGI Safety and Security,” a Google DeepMind team whose authors include Anca Dragan, Rohin Shah, Four Flynn, and Shane Legg lays out its strategy for developing artificial general intelligence (AGI) responsibly. AGI here means systems capable of matching or exceeding human cognitive abilities across a diverse range of tasks.

Balancing Promise and Peril

The DeepMind team approaches AGI with a dual perspective: optimism about its transformative potential alongside rigorous caution about its risks. AGI promises advances ranging from better medical diagnostics and personalized education to stronger cybersecurity. These benefits come with serious challenges, from deliberate misuse to fundamental misalignment with human values.

Four Pillars of AGI Risk Management

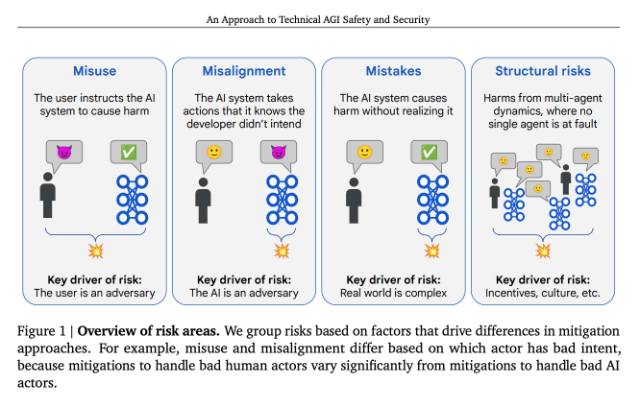

DeepMind’s research identifies four risk areas, which the paper calls misuse, misalignment, mistakes, and structural risks. Each requires attention:

- Misuse Prevention: Protecting against deliberate exploitation of AGI capabilities

- Alignment Assurance: Ensuring AGI systems operate in accordance with human values and intentions

- Accident Mitigation: Preventing unintended consequences from powerful autonomous systems

- Societal Impact Assessment: Addressing broader implications for society, economy, and human well-being

Building Technical Safeguards from the Ground Up

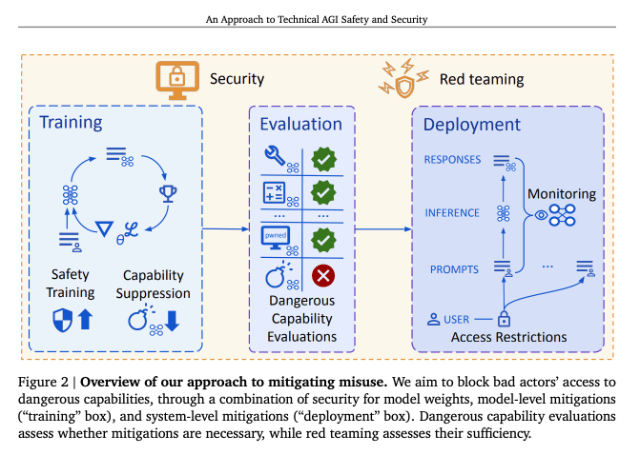

DeepMind’s proactive approach to AGI safety encompasses multiple defensive layers:

- Access Control Architecture: Implementing robust restrictions on system capabilities

- Scenario Simulation: Testing AGI responses across diverse and challenging situations

- Human Feedback Integration: Refining training methodologies through continuous human guidance

- Uncertainty-Aware Behavior: Developing systems that recognize their limitations and proceed with appropriate caution
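
To make the last item concrete, here is a toy sketch of uncertainty-aware behavior: a system answers only when its top candidate is confident enough and otherwise defers to a human. This is an illustration under stated assumptions, not DeepMind's method; the `decide` function, the `Decision` type, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Decision:
    action: str               # "answer" or "defer"
    answer: Optional[str]     # chosen option, or None when deferring
    confidence: float         # probability of the top candidate


def decide(probabilities: Dict[str, float], threshold: float = 0.8) -> Decision:
    """Answer only when the top candidate clears the threshold; otherwise defer.

    `probabilities` maps candidate answers to the model's estimated probability.
    The threshold value is an illustrative assumption, not a recommended setting.
    """
    if not probabilities:
        # Nothing to choose from, so hand the case to a human.
        return Decision("defer", None, 0.0)

    best, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return Decision("answer", best, confidence)

    # Too uncertain: escalate instead of guessing.
    return Decision("defer", None, confidence)
```

Calling `decide({"approve": 0.55, "reject": 0.45})` defers because neither option reaches the threshold, while a 0.95 probability on one option produces an answer. A real system would also need well-calibrated probabilities, which this sketch simply assumes.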

Transparency Through Advanced Interpretability

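Understanding the reasoning behind AGI decisions remains a cornerstone of DeepMind’s safety approach. Interpretability tools aim to provide visibility into the decision-making processes of increasingly complex AI systems. Related DeepMind work such as MONA (Myopic Optimization with Nonmyopic Approval) is a training method rather than an interpretability tool; it aims to keep an AI system's longer-term planning understandable to humans.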

Continuous Evaluation and Independent Oversight

Rather than relying solely on internal assessments, DeepMind has established:

- Regular comprehensive safety evaluations

- Open channels for independent expert input

- Rigorous stress-testing protocols that evolve alongside the technology

An Evolving Framework for Unprecedented Challenges

DeepMind’s paper doesn’t present AGI safety as a solved problem. Instead, it offers a dynamic framework designed to adapt as AGI development progresses. This approach recognizes that responsible AGI creation requires not just anticipating potential failures but building systems resilient enough to manage unforeseen challenges.

The Path Forward: Building with Unprecedented Care

For those following AGI development, this paper provides valuable insight into how one of AI’s leading research laboratories approaches responsibility at an unprecedented scale. DeepMind neither minimizes the complexity of AGI safety nor overstates current capabilities. Instead, it poses the essential question: How do we develop transformative technology with sufficient care when the stakes involve humanity’s future?

Interesting AI Highlights

Is Hacking’s Future Agentic?

By Rhiannon Williams | MIT Technology Review

AI agents are rapidly advancing in intelligence and independence—and cybersecurity experts are sounding the alarm. These next-gen tools go beyond simple bots: they can adapt, strategize, and carry out cyberattacks with striking precision and scale. One initiative, the LLM Agent Honeypot, is already monitoring these AI-powered threats in real time. According to researchers, it’s not a question of if cybercriminals will begin using agents to hack—it’s when. And we need to be prepared.

AI tools to try

- Beautiful AI – Instantly design stunning presentations with AI.

- Cove Apps – A visual workspace for building custom AI-powered interfaces.

- ElevenLabs – Just launched a text-to-bark model for dogs.
